Being a Part of This Thing Called Education

Let’s start with a philosophical question. What is the point? I think that question can be asked of just about anything we are expected to do. Go to school. Get a job. Get married. Don’t get married. Have kids. Get a pet. Learn an instrument. Have a drink. Start a blog! Well, why? Why do any of it?

All right, now that all the nihilists have sat back down, let me just say that I am not a nihilist. I believe the overwhelming majority of things in life matter to a certain degree, but some things have a more pertinent point than other things. You would most likely drive yourself mad very quickly if you tried to treat every detail of your life with the same degree of extreme importance. I certainly would. Where I am beginning this blog post is with the question, “How important is formal education?”

I started thinking through this question this week after a few short readings on the subject (here, here, and an excerpt from here). As a career K12 educator, I certainly had some thoughts on the subject, as well as some Pavlovian defensive reactions. You see, it’s pretty fashionable, not to mention politically expedient, to blame education for most of the ills in our society. If that ire were directed at those setting the “education agenda” (mostly politicians) then OK. In fact, I agree that there is some change needed in top-down direction for public schools. Unfortunately, the people who get most of the blame are the educators working with students and running schools.

Now let me pause here and say two things. First, not all teachers are great at what they do. Everyone has had a bad teacher and they leave a sour taste for a long time. No one (especially other teachers) wants bad teachers in schools. That being said, please don’t let one bad teacher spoil your experiences with the other great teachers who cared for you and helped you learn. That’s like getting a bad waiter and swearing off going out to eat for the rest of your life. It’s nonsense. Second, I get why it happens. It’s easier to pick on someone who either won’t or can’t fight back in the same way they are being attacked. In schools, we call that being a bully. It also gets you in trouble. I suppose that is one of the disconnects schools have with “the real world.”

So let me take that opportunity to segue back into the idea of educational goals. That’s where I was going originally. If I were to condense those previously mentioned readings into one sentence, it would be this: There is a significant gap between what schools are teaching and what students need to be successful adults in our society. Right now, schools are focused on cramming information into students in an effort to get them to repeat that information back by correctly answering multiple choice questions (at least that’s the perception). Instead, schools should be focusing on teaching students how to be good thinkers, to solve problems, and to guide them in finding their individual path to personal fulfillment. I propose that we can think of these two constructs for educational goals as points on a spectrum. We don’t have to choose one or the other, because there is a fairly massive area in between with plenty of available real estate.

So let’s say that right now our education system is indeed currently too close to the “information acquisition” side of the spectrum (the more skeptical might call this “memorize and monetize”) and we need to shift more towards the “motivate to innovate” side (which a different set of skeptics might call “rainbows and unicorns”). How do we get from where we are to where we’d like to be? Since this is a real problem there are many thoughts on how to do this. Here are a couple ideas worth considering and my thoughts on each.

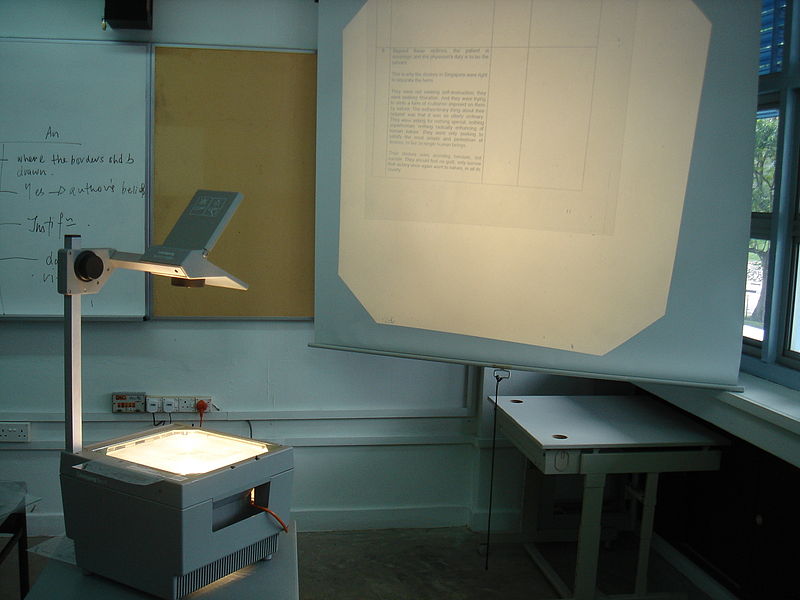

Let’s consider how a school environment would change if we moved from “information acquisition” to “motivate and innovate.” The first change I think we’d all notice is significantly fewer lectures and PowerPoints. Notice I didn’t say we’d eliminate lectures and PowerPoints. There is absolutely still a need for experts to act as experts to the benefit of students who are working on learning. Don’t agree with that? Here’s what an expert has to say on the matter. Teachers have value. If we attempt to replace teachers with systems and AI we are really doing a disservice to students (sorry Sal, but sites like yours should support the work of teachers, including being used as a curriculum, but should not attempt to displace teachers).

So we still need teachers, but we need teachers who are able to help students see the value in what they are learning. Some see this as making learning fun. I think sometimes we see stories or videos of kids making their own educational video games and think of that as the “gold standard” of learning. It’s a valuable approach that can yield cool results. For full disclosure, I taught and developed curriculum for two separate Video Game Programming courses. One was more focused on using games as a tool to teach programming concepts while the other attempted to balance an understanding of gameplay concepts alongside basic programming concepts. The classes were great. Most students really enjoyed the classes. There were some in every class that didn’t.

So what happened there? Students had a fairly high degree of freedom to explore concepts in an elective class they chose to take. There was always time built in to play with the game design tools. That was intentional. Why didn’t all students engage in the course? Based on my personal interactions with each and every student in the courses I taught, the reason some students really tried and others didn’t is that some cared enough to try and others did not. Not one single student who did poorly in my class did so because he or she couldn’t grasp the content. If the student was motivated to get it then the student eventually got it. Some progressed faster than others, which is what you’d expect, but the difference between learning and not learning was student motivation.

This realization is incredibly important in the discussion of changing educational outcomes for students. Some (possibly even some authors and articles linked to in this post) see the key to improvements in education occurring as a result of increased use of situated learning and gamification practices. Both those things are great! Both can lead to improved motivation and better learning outcomes. Neither is a “magic bullet” that impacts every student. We’d like to find that, but we haven’t yet. The most impactful teaching strategy I’ve ever seen is personal relationships with students, but even that falls short of inspiring some students. There has to be some movement on the part of the student to want to learn, whether that happens through reading, listening to a lecture, participating in a simulation, or playing a video game.

Be bold with innovative instructional approaches that push students to learn in unique ways. Online communities, gaming, and project-based learning are just a few of the multitude of instructional practices that move us along the spectrum towards “motivate and innovate.” Just know that regardless of the fun and engagement you build into a course, the outcomes you want to achieve matter and there very well could be students who simply don’t buy in. That is, far and away, the most difficult thing about being a teacher, but you can’t let it define you as a teacher or cause you to lower your expectations to accommodate unmotivated students. Appreciate the fact that the majority of your students bought into the process and continue to look for ways to help your students learn more deeply and demonstrate their learning more creatively.